Jul 14, 2024 02:43 PM IST

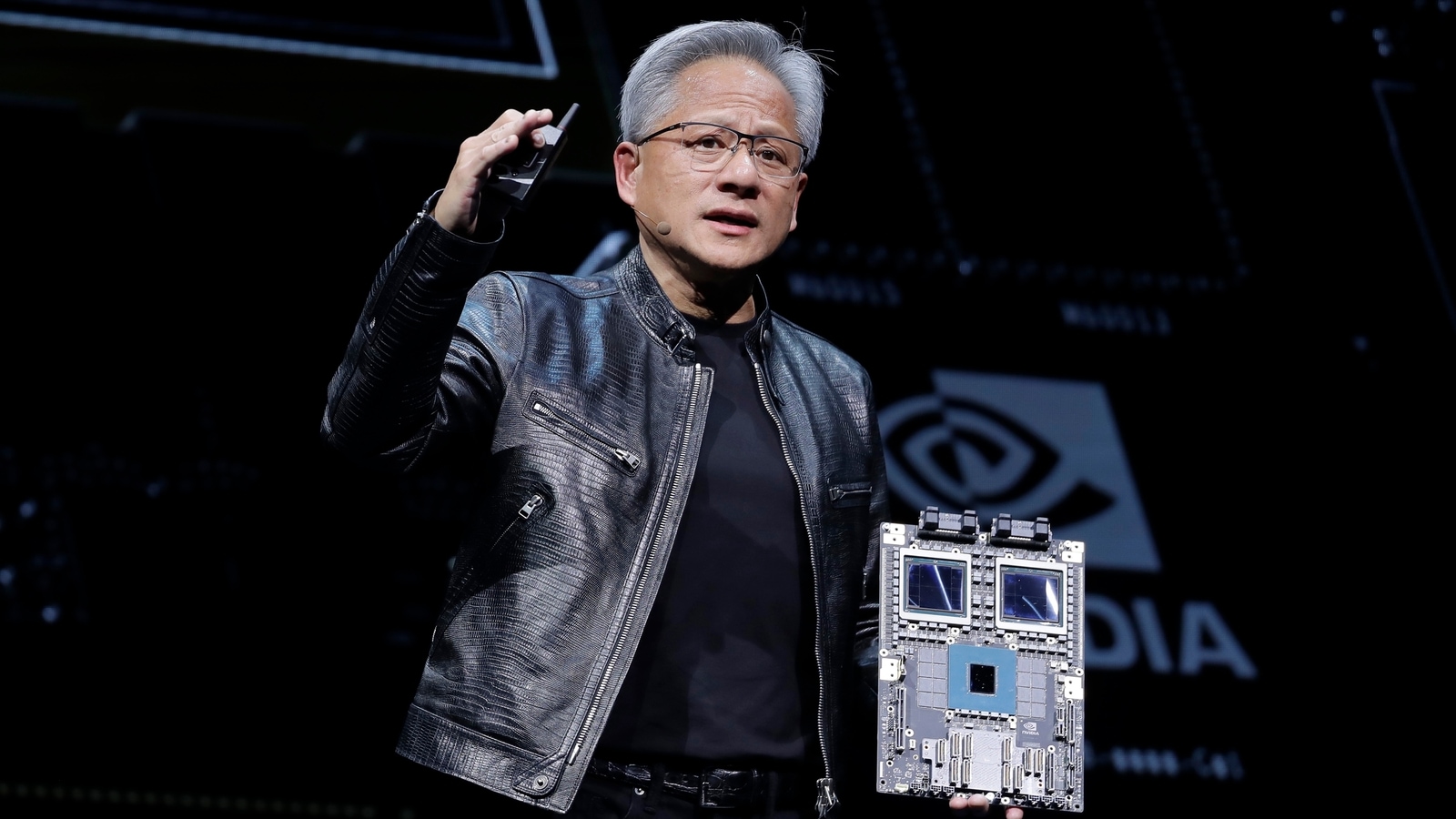

Nvidia, the biggest beneficiary of the AI boom, enjoys close to a monopoly when it comes to making chips for powerful generative AI models like OpenAI’s ChatGPT.

Nvidia could be worth almost $50 trillion in a decade, which is more than the combined market value of the entire S&P 500 ($47 trillion), the Financial Times reported, quoting James Anderson, one of the most successful tech investors, known for his early bets on Tesla and Amazon.

Why is the market sentiment for Nvidia so positive?

Nvidia has been the biggest beneficiary of the AI boom because it has close to a monopoly on the chips used for training and running powerful generative AI models such as OpenAI’s ChatGPT.

Nvidia shares have surged 162% this year, taking its market cap above $3 trillion. That is 20 times the value of the chipmaking giant in 2018, when it was worth $150 billion. It even briefly surpassed Microsoft and Apple this June to become the world’s most valuable listed company.

Who is James Anderson?

Anderson is best known for his four decades at Baillie Gifford, a Scottish investment firm that bought Nvidia stock in 2016, where he became a star of tech investing, according to the report. It added that he teamed up last year with Italy’s billionaire Agnelli family to launch Lingotto Investment Management, where he runs a $650 million fund. The fund’s largest investment is, of course, Nvidia.

Anderson believes that AI chip demand is growing at about 60% a year. He said that this growth over a period of 10 years would translate into $1,350 of earnings per Nvidia share and a market capitalisation of $49 trillion. He believes that the probability of this happening is 10-15%.
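
To see what that growth rate implies, here is a minimal Python sketch of the compounding arithmetic. It assumes the 60% rate holds constant for all 10 years, and the function name `compound_growth_factor` is purely illustrative; it does not come from the report. It shows only the implied demand multiple, not how Anderson arrived at his earnings or market capitalisation figures.

```python
def compound_growth_factor(annual_rate: float, years: int) -> float:
    """Return the cumulative multiple after compounding annual_rate for years.

    Assumption: the rate stays constant every year, which is a simplification.
    """
    return (1.0 + annual_rate) ** years


if __name__ == "__main__":
    # 60% a year for 10 years, as cited in the article.
    factor = compound_growth_factor(0.60, 10)
    print(f"Cumulative growth multiple over 10 years: {factor:.1f}x")
```

Under that assumption, demand a decade from now would be roughly 110 times today's level.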

What are Nvidia’s future goals?

Cloud service providers see a high return on investment if they use Nvidia GPUs, Firstpost quoted Ian Buck, the vice president and general manager of Nvidia’s hyperscale and HPC business, as saying during the Bank of America Securities 2024 Global Technology Conference.

For every dollar spent on GPUs, cloud providers can make five dollars over a period of four years, he said. For AI inferencing tasks, the return is seven dollars for each dollar invested.

AI inferencing is the process by which a trained AI model draws conclusions from new data it is fed. Nvidia is addressing the demand for this through products like the NVIDIA Inference Microservices (NIMs), which support popular AI models like Llama, Mistral, and Gemma, according to the report.

The company is also focusing on its new Blackwell GPU, which performs inference tasks while using less energy. It is also slated to release its Rubin GPU for cloud providers; the significance here is the data centre infrastructure development needed to support the AI revolution.